Cerebras Unveils New WSE-3 AI Chip

A Chip 56x Larger than Nvidia’s H100

Hey Everyone,

This is one of my “new” newsletters; I now run more than 10 covering various aspects of emerging tech. Thanks for the support. I hope to learn about these topics along with you.

Welcome to the fourth edition of Semiconductor Things™, where I break down global news in the semiconductor, AI chip and datacenter space to make it more accessible and easier to follow.

With the rise of Generative AI, Nvidia and TSMC, and the geopolitical importance of Taiwan, semiconductors have become a major focus of how I follow the emerging tech space as an analyst, writer, curator and news watcher.

The Technology category is an underdog relative to Substack’s baseline audience, which makes me an outlier here. To survive, I’m building 10+ newsletters covering “emerging tech,” a pilot never before seen on Substack for a one-person team. Full disclosure: I may not make it.

Important note: I am not native to the semiconductor space, and it may take me a while to get up to speed.

Why is TSMC moving faster in Japan and Germany than the U.S.?

As TSMC diversifies its supply chain around the world, it’s moving much faster in Japan than in the U.S. Asiametry does a good job of explaining why.

Nvidia’s Blackwell Generative AI Chip Architecture

While I’ve been covering the Nvidia GTC conference, a lot has been going on in the chip world. For details on Nvidia’s announcements, read my deep dive.

In brief, demand for Nvidia’s must-have H100 AI chips has set the company apart from the rest of the market and made it a multitrillion-dollar company. Now its Blackwell generation promises to raise its game further.

Nvidia is about to extend its lead with the new Blackwell B200 GPU and GB200 “superchip.” Its next-generation graphics processor for artificial intelligence, Blackwell, will cost between $30,000 and $40,000 per unit, according to CEO Jensen Huang. Good luck getting your hands on one, though: H100s are still fairly difficult to obtain as of March 2024.

GB200 Grace Blackwell Superchip and B200

How much better than the H100?

Nvidia is also making some impressive claims. The company says its GB200 Superchip will offer up to a 30x performance increase over the H100 GPU for large language model inference workloads while using up to 25x less energy.

30x more performance

25x less energy

It will be incredible to see what LLMs can do with more compute, and the gains will likely lead to cheaper compute for end consumers.

Chips, LLMs and Robotics?

Oddly, Nvidia also showed off Project GR00T at GTC, a multimodal AI to power the humanoid robots of the future.

I wrote a deep dive on that in case you are interested.

Semiconductor Bits & Bites 📱

I call Dan the Chip Whisperer. It’s remarkable what you notice when you’re based in Taiwan, where TSMC is the center of the chip world.

For Nvidia, most H100 volume went to Microsoft, Meta and other Big Tech players. Will the same hold for the B200? That is one of the big questions for 2024/25.

Nvidia’s new Blackwell GPUs will use TSMC’s CoWoS-L advanced packaging technology, which is designed for chiplets, while the previous H100 and H200 GPUs used CoWoS-S; the switch is mainly due to differences in how the GPUs are built, media report.

Meta expects to receive initial shipments of Nvidia’s new flagship AI chip, the B200 Blackwell chip, later this year, Reuters reports, noting Nvidia CFO Colette Kress said of the B200 GPU, “we think we’re going to come to market later this year,” but volume shipments will not ramp up until 2025.

The new Nvidia Blackwell GPUs unveiled Monday are expected to ship later this year, Nvidia CFO Colette Kress said, as CEO Jensen Huang says he’s chasing a data center market potentially greater than US$250 billion and growing 25% per year, Reuters reports.

President Biden is expected to announce a multi-billion dollar CHIPS Act award for Intel on Wednesday (today) in Phoenix, Arizona, the New York Times reports, adding that an unnamed White House official said the grant would be the first of several for chip makers, including Samsung, Micron and TSMC.

Samsung Electronics has established AGI Computing Labs in both the USA and South Korea to spearhead efforts to “create a completely new type of semiconductor specifically designed to meet the astonishing processing requirements of future AGI,” said Dr. Woo Dong-hyuk, head of the new labs and a former developer of Google’s Tensor processors, media report.

Nvidia, TSMC and Synopsys are using Nvidia graphics processing units (GPUs), the cuLitho software library and AI to transform a key step in semiconductor manufacturing (lithography), cutting production time, power use, costs and more.

Samsung Electronics expects US$100 million or more in sales of advanced semiconductor packaging this year, Reuters reports, citing co-CEO Kye-Hyun Kyung.

Nvidia is qualifying Samsung’s HBM (high bandwidth memory) chips and will use them in the future, Nikkei reports, citing CEO Jensen Huang.

Bloomberg reported, citing multiple sources, that the Biden administration plans to provide Samsung Electronics with over $6 billion (approximately 7.96 trillion won) in subsidies.

Did you know?

Nvidia’s Blackwell B200 GPUs are manufactured on a custom-built TSMC 4NP process and consist of two GPU dies connected by a 10 TB/second chip-to-chip link into a single, unified GPU.

This week, though, I can’t stop thinking about Cerebras.

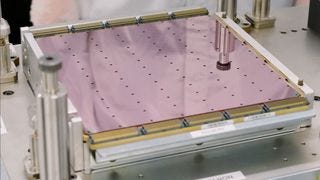

What’s special about Cerebras Systems?

While we were expecting all of these things, the news that caught me most off guard is what Cerebras is up to.

Cerebras Systems Inc. is an American artificial intelligence company with offices in Sunnyvale, San Diego, Toronto, Tokyo and Bangalore, India. Cerebras builds computer systems for complex deep learning applications.

They have raised a total of $750M over 6 rounds.
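Here is a quick back-of-the-envelope check on the “56x larger” figure in the headline. It assumes the comparison is by silicon area and uses publicly reported die sizes that do not appear in the sources above: about 46,225 mm² for the wafer-scale WSE-3 and about 814 mm² for the H100. Treat these as approximate.

```latex
% Assumed die areas (publicly reported, approximate): WSE-3 ~ 46,225 mm^2, H100 ~ 814 mm^2
\frac{A_{\text{WSE-3}}}{A_{\text{H100}}} \approx \frac{46{,}225\ \text{mm}^2}{814\ \text{mm}^2} \approx 56.8
```

Under those assumptions the area ratio comes out at roughly 57x, which is consistent with the “56x” in the headline.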